January 04, 2022

An Old WSUS Tip

This is a copy of an article from tecknowledgebase.com written by Tom Menezes. While this blog started as a way for me to jot down Linux-based scripts, tips, tricks, etc., it’s also a place for me to save a bunch of random stuff for my own personal reference. This particular article is one that I’ve referenced frequently over the years when managing WSUS servers. The original article (Archive Link) recently went offline, so I wanted to make a copy for myself and for others who bookmarked it in the past and still need to reference it.

Note: The article has been slightly reformatted while copying.

How to identify and decline superseded updates in WSUS

Although you can use the Server Cleanup Wizard, from time to time you may want to manually decline all superseded updates to clean up your WSUS infrastructure.

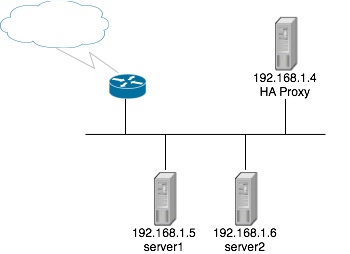

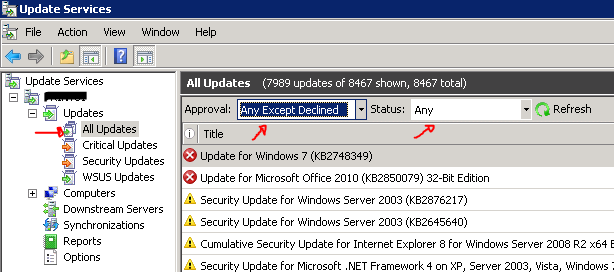

Open the Windows Server Update Services (WSUS) MMC, then select the All Updates view as you can see below.

Set the display to show the Approval status of ‘Any except Declined’ with a Status of ‘Any’, then click Refresh.

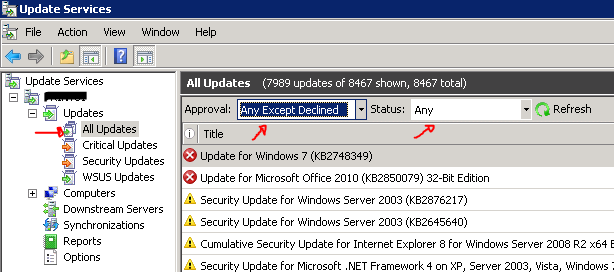

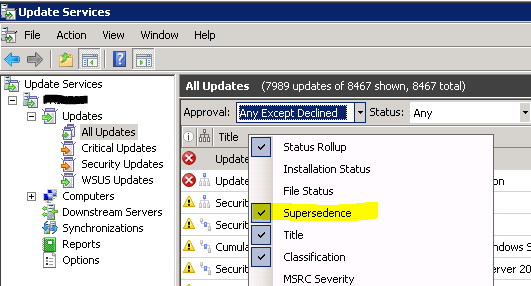

Right-click in the title bar and Enable the ‘Supersedence’ column to make it visible.

Select and Decline the Superseded Updates

The updates to be declined have one of two particular flowchart symbols for their updates pictured in the attached image. Select the correct updates and Decline them by either right-clicking the selected updates and clicking decline or by pressing the decline button in the action pane.

Now there are 4 options:

- No icon: the update neither supersedes another update nor is superseded by one.

- Blue square on top: this update supersedes another update. These are the updates you do not want to clean!

- Blue square in the middle: this update has been superseded by another update and supersedes another update as well. This is an example of an update you may want to clean (decline).

- Blue square in the bottom-right corner: this update has been superseded by another update. This is an example of an update you may want to clean (decline).

Run the Server Cleanup Wizard

Make sure you have all the options selected in the wizard and let it run. It will delete the files from the declined updates.

OPTIONAL: Automatic Approval Options

In the automatic approval options, under the Advanced tab, there are options to automatically approve new revisions of previously approved updates and subsequently decline the now expired updates. I suggest you select them.

Note: Always verify that all superseding updates are approved before doing this operation!

August 30, 2021

Installing NodeJS and NPM using the Precompiled Binaries

This is by far the easiest way to install NodeJS and NPM on modern linux distros. The nice folks at NodeJS have done all the work for you!

However, because it is precompiled you may run into some issues, but I personally have not experienced any yet. I have tested this on CentOS 7 and 8 as well as other RHEL-based distros such as Rocky Linux and AlmaLinux. I have also tried this on Ubuntu, but your mileage may vary.

Installing the latest cutting edge node

cd /usr/local/src

latestnode=$(curl https://nodejs.org/dist/latest/ 2>/dev/null | grep linux | sed -e 's/<[^>]*>//g' | awk '{print $1 }' | grep $([[ $(getconf LONG_BIT) = 64 ]] && echo x64 || echo x86) | grep tar.gz)

wget https://nodejs.org/dist/latest/$latestnode

tar xzf $latestnode

cd $(echo $latestnode | sed -e 's/.tar.gz//g')

sudo rsync -Pav {bin,include,lib,share} /usr/

We first start by switching into our src directory. I like doing this as having random source files spread out in /root and /home can be awfully messy. The next two lines are a fancy curl and wget combination that fetches the NodeJS latest folder (from their website), greps for linux, strips out the HTML, greps for whichever result matches your architecture and then downloads it! Pretty fancy! Following this we cd into the newly untarred directory and simply rsync the binary and library files. That’s it! You can verify by running the following:
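The verification commands themselves did not survive in this copy. A minimal sketch, assuming the intent was simply to confirm that both binaries are on the PATH and can print a version (check_cmd is a hypothetical helper name, not part of Node):

```shell
# check_cmd NAME: print NAME's version line if it is on PATH, otherwise
# report it as missing. Hypothetical helper for illustration only.
check_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    printf '%s: %s\n' "$1" "$("$1" --version 2>&1 | head -n 1)"
  else
    printf '%s: not found on PATH\n' "$1"
    return 1
  fi
}

# "|| true" keeps the script going even when one of them is missing.
check_cmd node || true
check_cmd npm || true
```

If either line reports "not found", the rsync step most likely copied the files somewhere other than /usr/bin.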

If it’s copied correctly, those commands will not error out.
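To see what the latestnode pipeline does without touching the network, here is a sketch that runs the same filters over a made-up directory listing. The sample HTML, the version number and the pick_tarball helper are all assumptions for illustration; the real page at nodejs.org/dist/latest/ may be formatted differently.

```shell
# pick_tarball ARCH: read an HTML directory listing on stdin and print the
# Linux .tar.gz file name for ARCH (hypothetical helper, same filters as above).
pick_tarball() {
  grep linux |
    sed -e 's/<[^>]*>//g' |   # strip the HTML tags
    awk '{print $1}' |        # keep only the file name column
    grep "$1" |               # keep the requested architecture
    grep 'tar\.gz$'           # drop the .tar.xz variant
}

# Made-up sample listing, not real output from nodejs.org.
listing='<a href="node-v17.3.0-darwin-x64.tar.gz">node-v17.3.0-darwin-x64.tar.gz</a>  17-Dec-2021 20:20  43000000
<a href="node-v17.3.0-linux-arm64.tar.gz">node-v17.3.0-linux-arm64.tar.gz</a>  17-Dec-2021 20:20  44000000
<a href="node-v17.3.0-linux-x64.tar.gz">node-v17.3.0-linux-x64.tar.gz</a>  17-Dec-2021 20:20  45000000
<a href="node-v17.3.0-linux-x64.tar.xz">node-v17.3.0-linux-x64.tar.xz</a>  17-Dec-2021 20:20  22000000'

picked=$(printf '%s\n' "$listing" | pick_tarball x64)
echo "$picked"
```

Printing the chosen name before handing it to wget is a cheap way to notice when the listing format changes and the filters stop matching.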

Installing LTS version of Node*

Coming Soon!

March 21, 2014

For the longest time I have wanted to be able to support multiple versions of PHP within apache and cPanel but I wasn’t able to find any sort of guide on this. After searching for a while, I did come across ntphp and these guys did a really, really great job! If you are looking for a quick and simple way to get multiple versions of PHP that also comes with a cPanel plugin, check these guys out! However, I like to do things myself and come up with my own way of doing things. So here is my guide to installing multiple versions of PHP in cPanel!

A couple Things to Note

- This will only work with suPHP (Not DSO or mod_ruid2)

- This does not sync up with EasyApache or rebuild with EasyApache

- You probably shouldn’t build the same version as the default. If your default PHP version is 5.4, then don’t build php5.4 via this method. I don’t think anything will happen but I would personally avoid it.

Installing PHP 5.2

PHP 5.2 is slightly different because it requires a patch in order to build correctly. If you don’t care about 5.2, skip down to 5.3, 5.4, and 5.5. Recently, multiple versions of PHP were flagged with a vulnerability and it’s recommended you avoid those versions. All versions of PHP 5.2 were listed because 5.2 is officially end of life and has not been and will not be updated. Basically, you should NOT be using this version of PHP.

With that being said, I have had some clients that did require this and had not had a chance to upgrade their site code. The method below is perfect for this scenario! You can have PHP 5.5 as the default and install PHP 5.2 for that 1 client that refuses to upgrade their 4-year-old code. Let’s take a look at the process!

Download and Extract It

Let’s start by downloading it! We have to go to the PHP museum for this:

su -

cd /usr/local/src/

wget -O php-5.2.17.tar.gz http://museum.php.net/php5/php-5.2.17.tar.gz

tar -xvzf php-5.2.17.tar.gz

cd php-5.2.17

Build It

The easiest way to configure this is to copy the config options from the current working PHP (the one you built in EasyApache):

$(php -i | grep configure | sed s/\'//g | cut -d" " -f4- | sed "s:--prefix=/usr/local:--prefix=/usr/local/php52:")

Quick note: the $() surrounding the command takes the output and runs it as a command within the shell. If you are running LiteSpeed (but using Apache), run the command above without the $() so that it only prints the configure line, remove --with-litespeed from it, and then run the result. If you don’t do this it will not build php-cgi, which is required for suPHP. If you get errors about sqlite not being shared, you can simply remove it (if you don’t need it, of course) by appending the following to the command: --without-pdo-sqlite --without-sqlite
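As a rough illustration of that note, the sketch below applies the prefix rewrite to a stand-in configure line and prints the result instead of executing it. The options in the sample line are made up; on a real server the line would come from php -i.

```shell
# Stand-in for the line the php -i pipeline would emit; options are made up.
current="./configure --prefix=/usr/local --with-curl --with-litespeed"

# Same prefix rewrite as above, plus dropping --with-litespeed.
rewritten=$(printf '%s\n' "$current" |
  sed -e "s:--prefix=/usr/local:--prefix=/usr/local/php52:" -e "s: --with-litespeed::")

# Printing the line lets you inspect it first. Wrapping the pipeline in $( )
# on a line of its own would instead run the result as a command.
echo "$rewritten"
```

Checking the printed line before running it makes it easier to spot an option that should be removed or appended.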

Next we need to add the patch for php 5.2 for it to build correctly:

wget https://mail.gnome.org/archives/xml/2012-August/txtbgxGXAvz4N.txt

patch -p0 -b < txtbgxGXAvz4N.txt

Then make it and install:

make && make install

If you look in /usr/local/php52/bin you should see a binary file called ‘php-cgi’. If you do not, you will need to go back to the “Build It” section and rerun the configure command with --with-apxs2=/usr/local/apache/bin/apxs appended to the end of the configure line. Apxs is located in /usr/local/apache/bin/ by default in cPanel but it never hurts to verify. Then you can run make && make install again. You should now see the php-cgi binary.

Add It To Apache

Generate the PHP config:

cat > /usr/local/apache/conf/php52.conf << EOF

AddType application/x-httpd-php52 .php52 .php

<Directory />

suPHP_AddHandler application/x-httpd-php52

</Directory>

EOF

And add the include to apache:

echo "Include /usr/local/apache/conf/php52.conf" >> /usr/local/apache/conf/includes/pre_main_global.conf

Then Add the Handler to suPHP’s config:

sed -i '/\;Handler for php-scripts/a application\/x-httpd-php52=\"php\:\/usr\/local\/php52\/bin\/php-cgi\"' /opt/suphp/etc/suphp.conf

Copy a default php.ini into 5.2

cp /usr/local/cpanel/scripts/php.ini /usr/local/php52/lib/

And restart Apache!

Configure Domain To Use This PHP

Add the following to /home/username/public_html/.htaccess:

AddType application/x-httpd-php52 php

And you are done! All of your php binaries and libraries are located at /usr/local/php52/. The php binary is /usr/local/php52/bin/php and pecl/pear is located at /usr/local/php52/bin/pecl. Your php.ini will be reachable from here /usr/local/php52/lib/php.ini. Enjoy!

Installing PHP 5.3, 5.4, 5.5

These do not require a patch and the setup is otherwise exactly the same. However, please READ EACH COMMAND CAREFULLY. I’m going to write this guide for PHP 5.5, but if you want to use it for PHP 5.3 or 5.4, please make sure you read every command and replace all applicable strings with your version. You’ll want to replace all instances of 5.5.10 and all instances of php55 with your respective version. Let’s get going!

Download and Extract It

Let’s start by downloading it:

su -

cd /usr/local/src/

wget -O php-5.5.10.tar.gz http://us1.php.net/get/php-5.5.10.tar.gz/from/this/mirror

tar -xvzf php-5.5.10.tar.gz

cd php-5.5.10

Build It

The easiest way to configure this is to copy the config options from the current working PHP (the one you built in EasyApache):

$(php -i | grep configure | sed s/\'//g | cut -d" " -f4- | sed "s:--prefix=/usr/local:--prefix=/usr/local/php55:")

Quick note: the $() surrounding the command takes the output and runs it as its own command within the shell. If you are running LiteSpeed (but using Apache), run the command above without the $() so that it only prints the configure line, remove --with-litespeed from it, and then run the result. If you don’t do this it will not build php-cgi, which is required for suPHP. If you get errors about sqlite not being shared, you can simply remove it (if you don’t need it, of course) by appending the following to the command: --without-pdo-sqlite --without-sqlite

Then we make it and install:

make && make install

If you look in /usr/local/php55/bin you should see a binary file called ‘php-cgi’. If you do not, you will need to go back to the “Build It” section and rerun the configure command with --with-apxs2=/usr/local/apache/bin/apxs appended to the end of the configure line. Apxs is located in /usr/local/apache/bin/ by default in cPanel but it never hurts to verify first. Then you can run make && make install again. You should now see the php-cgi binary.

Add It To Apache

Generate the PHP config:

cat > /usr/local/apache/conf/php55.conf << EOF

AddType application/x-httpd-php55 .php55 .php

<Directory />

suPHP_AddHandler application/x-httpd-php55

</Directory>

EOF

And add the include to Apache:

echo "Include /usr/local/apache/conf/php55.conf" >> /usr/local/apache/conf/includes/pre_main_global.conf

Add the Handler to suPHP:

sed -i '/\;Handler for php-scripts/a application\/x-httpd-php55=\"php\:\/usr\/local\/php55\/bin\/php-cgi\"' /opt/suphp/etc/suphp.conf

Copy a default php.ini:

cp /usr/local/cpanel/scripts/php.ini /usr/local/php55/lib/

And restart Apache!

Configure Domain To Use This PHP

Add the following to /home/username/public_html/.htaccess:

AddType application/x-httpd-php55 php

And you are done! All of your php binaries and libraries are located at /usr/local/php55/. The php binary is /usr/local/php55/bin/php and pecl/pear is located at /usr/local/php55/bin/pecl. Your php.ini will be reachable from here /usr/local/php55/lib/php.ini. Enjoy!
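Since the Apache and suPHP snippets differ only in the version tag, they can be generated rather than edited by hand. The sketch below only prints the two snippets so you can review them; php_conf and suphp_handler are hypothetical helper names, and writing the output into the real config files is left to you.

```shell
# php_conf TAG: print the Apache include for a PHP build tagged TAG (e.g. 54).
php_conf() {
  cat <<EOF
AddType application/x-httpd-php$1 .php$1 .php
<Directory />
    suPHP_AddHandler application/x-httpd-php$1
</Directory>
EOF
}

# suphp_handler TAG: print the matching handler line for suphp.conf.
suphp_handler() {
  printf 'application/x-httpd-php%s="php:/usr/local/php%s/bin/php-cgi"\n' "$1" "$1"
}

# Example: review the snippets for a PHP 5.4 build before installing them.
php_conf 54
suphp_handler 54
```

Generating the text from a single tag avoids the easy mistake of leaving one php55 behind when adapting the steps for 5.3 or 5.4.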

Wrap Up

So that wasn’t too hard! You should now have a fully functional php install for your clients! Another quick note, this doesn’t work with litespeed. I’ll be writing up a litespeed specific version in a little while. I also plan on creating a WHM plugin for this to quickly generate new php versions (And even get fancy like having several instances in the same branch). If that ever happens I’ll post about it here. If you have any questions, hit me up in the comments or on twitter. I can’t guarantee I’ll have the answers but I’ll give it my best!

January 31, 2014

Python is built right into CentOS, but if you are using CentOS 5 you are stuck with Python 2.4. This is pretty old and we are getting to the point where many plugins simply will not work with it any longer. You may be on CentOS 6, which is better but still behind. This guide will allow you to install Python 2.7 or 3.4 without removing the version built into CentOS. I haven’t tested it, but there is no reason this should not work with Ubuntu or Debian. To use the new version you will have to launch it by typing “python2.7” or “python3.4” rather than just “python”. You can then put /usr/local/python2.7/bin/python2.7 in the shebang of a script to have it called automatically.

Another note: many admins prefer not to even mess with Python this way, as yum relies on Python to do its work. This method will install Python 2.7 or Python 3 without breaking yum. Let’s get started!

PreReq

If you haven’t already, go ahead and install the development tools:

yum groupinstall "Development Tools"

You will also need the zlib developer package and openssl-devel

yum install zlib-devel openssl-devel

Installing Python 2.7

cd /usr/local/src

wget http://python.org/ftp/python/2.7.9/Python-2.7.9.tgz

tar xzf Python-2.7.9.tgz

cd Python-2.7.9

./configure --prefix=/usr/local/python2.7 --with-libs --with-threads --enable-shared

make

make altinstall

This will install the python2.7 binaries and library files to /usr/local/python2.7/. This will keep everything organized and out of the way of the base python installation. Next we will add the lib folder to the library path and update our global PATH upon login

echo 'PATH=$PATH:/usr/local/python2.7/bin' > /etc/profile.d/python2.7-path.sh

echo 'export PATH' >> /etc/profile.d/python2.7-path.sh

echo "/usr/local/python2.7/lib" > /etc/ld.so.conf.d/opt-python2.7.conf

We can now run the following to update the server’s library cache and our own session:

ldconfig

source /etc/profile.d/python2.7-path.sh
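One small caveat: the profile script above appends to PATH unconditionally, so the same directory is added again each time the file is sourced in one session. A minimal sketch of a guard, assuming you would rather keep PATH free of duplicates (add_to_path is a hypothetical helper name):

```shell
# add_to_path DIR: append DIR to PATH only if it is not already listed.
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;                 # already present, nothing to do
    *) PATH="$PATH:$1" ;;
  esac
  export PATH
}

add_to_path /usr/local/python2.7/bin
add_to_path /usr/local/python2.7/bin   # second call is a no-op
echo "$PATH"
```

The same function could be placed in the profile.d script in place of the plain PATH assignment.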

It’s also a good idea to install setuptools, because who does not like to easy_install?

wget https://bitbucket.org/pypa/setuptools/raw/bootstrap/ez_setup.py -O - | python2.7

That’s it! Python 2.7 can be run by typing “python2.7” and easy_install can be run by typing “easy_install-2.7”. While you are here, go ahead and install pip. To answer the previous question of “Who does not like to easy_install?”: those who pip install!!

This can now be called by typing “pip-2.7” and you should have a fully complete setup!

Install Python3

This is virtually the same method as above. You can call up this version of Python by typing “python3.4” (because we use make altinstall, a plain “python3” link is not created).

cd /usr/local/src

wget http://python.org/ftp/python/3.4.2/Python-3.4.2.tgz

tar xzf Python-3.4.2.tgz

cd Python-3.4.2

./configure --prefix=/usr/local/python3.4 --with-libs --with-threads --enable-shared

make

make altinstall

This will install the python3.4 binaries and library files to /usr/local/python3.4/. This will keep everything organized and out of the way of the base python installation. Next we will add the lib folder to the library path and update our global PATH upon login

echo 'PATH=$PATH:/usr/local/python3.4/bin' > /etc/profile.d/python3.4-path.sh

echo 'export PATH' >> /etc/profile.d/python3.4-path.sh

echo "/usr/local/python3.4/lib" > /etc/ld.so.conf.d/opt-python3.4.conf

We can now run the following to update the server’s library cache and our own session:

ldconfig

source /etc/profile.d/python3.4-path.sh

For easy_install you had to use distribute but now you can use the same setup tools as before!

wget https://bitbucket.org/pypa/setuptools/raw/bootstrap/ez_setup.py -O - | python3.4

Then install pip

And that’s it, boys and girls!

January 08, 2014

You can easily install NodeJS with NPM via your default repository. If it isn’t in there then, due to its popularity, it’s likely in one of the many community repositories such as EPEL and RPMForge, but unfortunately they are rarely up to date. Luckily, getting the latest version of node and npm could not be easier! Let’s get started by grabbing some dependencies:

Prerequisites

Ubuntu

sudo apt-get install g++ curl libssl-dev apache2-utils

CentOS

You can just grab all the development tools

yum -y groupinstall "Development Tools"

Installing the Precompiled Binary

This is by far the easiest way as there is no compiling whatsoever; the nice folks at NodeJS have done all that for you! However, because it is precompiled you may run into some issues, but I personally haven’t experienced any yet.

cd /usr/local/src

wget http://nodejs.org/dist/latest/$(curl http://nodejs.org/dist/latest/ 2>/dev/null | grep linux | sed -e 's/<[^>]*>//g' | awk '{print $1 }' | grep $([[ $(getconf LONG_BIT) = 64 ]] && echo x64 || echo x86) | grep tar.gz)

tar xzf node-*.tar.gz

cd node-*/

rsync -Pav {bin,lib,share} /usr/

We first start by switching into our src directory. I like doing this as having random source files spread out in /root and /home can be awfully messy. The very next line is a fancy wget statement that curls the NodeJS latest folder, greps for linux, strips out the HTML, greps for whichever result matches your architecture and then downloads it! Pretty fancy! Following this we cd into the newly untarred directory and simply rsync the binary and library files. That’s it! You can verify by running the following:

If it’s copied correctly, those commands will not error out.

But I Like Compiling!!!

Well fine then, luckily it’s just as easy!

cd /usr/local/src

git clone https://github.com/nodejs/node.git

cd node

./configure

make

make install

And that’s that! Enjoy Node!!

August 29, 2013

For whatever reason I always had trouble installing the ImageMagick RPMs from their website. So when I got a good guide written down I figured I’d share it with everyone. Here’s how to install the latest and greatest ImageMagick RPMs on CentOS 5 and CentOS 6; it should be compatible with most cPanel systems!

Prerequisites

Let’s download all of the development tools and prerequisites:

yum -y install gcc gcc-c++ make

yum -y install rpmdevtools libtool-ltdl-devel freetype-devel ghostscript-devel libwmf-devel lcms-devel bzip2-devel librsvg2 librsvg2-devel autotrace-devel fftw3-devel libtiff-devel giflib-devel libXt-devel xz-devel

Then we’ll download all the RPMs:

#ImageMagick

wget -rnd ftp://ftp.imagemagick.org/pub/ImageMagick/linux/CentOS/$(uname -i)/

#libfftw3 for CentOS 5 Only

if [ "$(cat /etc/redhat-release | cut -d' ' -f3 | cut -d'.' -f1)" == 5 ]

then

wget -rnd http://pkgs.repoforge.org/fftw3/fftw3-3.1.1-1.el5.rf.$(uname -i).rpm

wget -rnd http://pkgs.repoforge.org/fftw3/fftw3-devel-3.1.1-1.el5.rf.$(uname -i).rpm

fi

#And Djvulibre

wget http://pkgs.repoforge.org/djvulibre/djvulibre-devel-3.5.22-1.el5.rf.$(uname -i).rpm

wget http://pkgs.repoforge.org/djvulibre/djvulibre-3.5.22-1.el5.rf.$(uname -i).rpm
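The CentOS 5 test in the download step above picks the third space-separated field of /etc/redhat-release, which depends on the exact wording of that file. A slightly more tolerant sketch, assuming the file always contains the word release followed by the version number (el_major is a hypothetical helper name, and the sample strings are assumed typical rather than taken from this guide):

```shell
# el_major: read a redhat-release style line on stdin, print the major version.
el_major() {
  sed -n 's/.*release \([0-9][0-9]*\).*/\1/p'
}

# Sample strings; check your own /etc/redhat-release for the real wording.
echo "CentOS release 5.9 (Final)" | el_major
echo "CentOS Linux release 7.9.2009 (Core)" | el_major
```

On a real server the input would come from the file itself, for example by redirecting /etc/redhat-release into the function.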

We need to copy libltdl.so to libltdl.so.3. You’ll most likely get an error on CentOS 5. You can ignore it if you do.

if [ "$(uname -i)" == "x86_64" ]

then

cp /usr/lib64/libltdl.so /usr/lib64/libltdl.so.3

else

cp /usr/lib/libltdl.so /usr/lib/libltdl.so.3

fi

Finally, install the RPMs…

rpm -Uvh *.rpm --nodeps --force

And verify!

[root@drop1 imagemagick]# convert --version

Version: ImageMagick 6.8.6-9 2013-08-25 Q16 http://www.imagemagick.org

Copyright: Copyright (C) 1999-2013 ImageMagick Studio LLC

Features: DPC OpenMP

Delegates: bzlib cairo djvu fftw fontconfig freetype gslib jng jp2 jpeg lcms ltdl lzma openexr png ps png rsvg tiff webp wmf x xml zlib

You can also give it a test by creating a sample image from text:

convert -background 'rgb(3,27,5)' -fill white -font Helvetica-Bold -size 250x100 -pointsize 42 -gravity center label:'Linux Rulez!!' linux.gif

ImageMagick PHP Extension

You may also need to install the ImageMagick PHP extension, and it’s just as annoying. Let’s get started. We need to create some folders and link some files:

mkdir -p $(MagickWand-config --prefix)/include/ImageMagick/

ln -s /usr/include/ImageMagick-6/wand $(MagickWand-config --prefix)/include/ImageMagick/wand

Download the file:

cd /root

wget http://pecl.php.net/get/imagick-3.1.0RC2.tgz

tar xzf imagick-3.1.0RC2.tgz

cd imagick-3.1.0RC2

And install:

phpize

./configure PKG_CONFIG_PATH=/usr/local/lib/pkgconfig/

make

make install

Add it to the php.ini:

echo "extension=imagick.so;" >> /usr/local/lib/php.ini

And verify!!

[root@drop1 ~]# php -i | grep imagick

imagick

imagick module => enabled

imagick module version => 3.1.0RC2

imagick classes => Imagick, ImagickDraw, ImagickPixel, ImagickPixelIterator

imagick.locale_fix => 0 => 0

imagick.progress_monitor => 0 => 0

July 17, 2013

If you’ve ever run into the “/tmp/foobar does not exist or is not executable” error after running “pecl install foobar”, then it’s likely because /tmp is mounted noexec in the fstab. Obviously removing the noexec flag and rebooting or remounting will fix the problem, but the time required to do this, and the fact that processes will need to be temporarily halted, doesn’t necessarily make this the best option. Let’s do a quick little fix!

mkdir /root/tmp

pecl config-set temp_dir /root/tmp

If this yells at you and says it failed, try this:

pear config-set temp_dir /root/tmp

Then “pecl install foobar”!

July 14, 2013

So you’ve discovered that all of a sudden your server load has shot up and your email inbox is getting filled up with hundreds of bounce backs. You, sir, may be spamming! Now if you are a spammer, this isn’t really much of a shock.

But if you’re not a spammer you may be wondering what happened. Well, odds are your website got hacked or your personal machine has a virus/malware. Usually, if your website gets hacked it’s because you are using an outdated version of your CMS software. Because WordPress doesn’t auto update and we don’t always log in every day (especially if your WordPress just hosts a static website) it can be hard to keep up with the constant updates. And of course, when you fall behind, all the little hackers out there are able to exploit whatever security holes you didn’t patch. On top of this, it isn’t just the WordPress core we have to worry about, but also the plugins and the themes. Joomla, Drupal and practically all other CMSs follow the same logic. Keep your apps up to date and your chances of being compromised slim down quickly.

What happens, though, when you are compromised? Usually the attacker places a PHP file on the server that acts as part of a DDoS, or a script that sends out a ton of spam. If your personal machine was compromised, then whatever application you use to connect to your email (such as Outlook, Thunderbird, etc.) is usually used (or they just grab any IMAP/SMTP connection info) to start spamming.

Ok, so we know that someone on the server is spamming. We don’t know if it’s a script or if it’s because someone’s personal machine got attacked. Let’s take a look at a couple of one-liners to help out with this. First, let’s look at a command which searches for all external logins (meaning the personal local machine was compromised):
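The original one-liner for this step did not survive in this copy. The sketch below is a reconstruction modeled on the U= one-liner further down, so treat it as an assumption rather than the author’s exact command. The log lines are made up, and count_logins is a hypothetical helper that reads from stdin so it can be tried without a mail server.

```shell
# count_logins: read exim_mainlog-style lines on stdin and count messages per
# authenticated login (dovecot_login or courier_login), busiest first.
# On a real server: exigrep @ /var/log/exim_mainlog | count_logins
count_logins() {
  grep '_login:' |
    sed -n 's/.*_login:\([^ ]*\).*/\1/p' |
    sort | uniq -c | sort -nr -k1
}

# Made-up sample log lines for illustration.
sample='2013-07-14 10:00:01 1UyA-0001 <= spam@example.com H=(pc) [203.0.113.5] P=esmtpa A=dovecot_login:spam@example.com S=1234
2013-07-14 10:00:02 1UyA-0002 <= spam@example.com H=(pc) [203.0.113.5] P=esmtpa A=dovecot_login:spam@example.com S=1250
2013-07-14 10:00:03 1UyA-0003 <= spam@example.com H=(pc) [203.0.113.5] P=esmtpa A=dovecot_login:spam@example.com S=1260
2013-07-14 10:00:04 1UyA-0004 <= bob@example.com H=(laptop) [198.51.100.7] P=esmtpa A=dovecot_login:bob@example.com S=900
2013-07-14 10:00:05 1UyA-0005 <= user1@server.example.com U=user1 P=local S=700'

top=$(printf '%s\n' "$sample" | count_logins | head -n 1 | awk '{print $1, $2}')
echo "$top"
```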

So this will exigrep through our mail log and return any line containing an @ (meaning pretty much everything), cut out the dovecot_login or courier_login (whichever one you use) and then sort it and count how many instances there are. In this case you can see that one email account is sending much, much more than the other two email accounts it found. This doesn’t immediately mean that it’s a spamming account; it could be legitimate, of course, but it gets you on the right path.

Now let’s look at a few one-liners for checking which user/account has been hacked:

$ exigrep @ /var/log/exim_mainlog | grep U= | sed -n 's/.*U=\(.*\)S=.*/\1/p' | sort | uniq -c | sort -nr -k1

74 user2 P=local

3 user1 P=local

So here the user “user2” is sending the most email on the system, so we know that this user is likely responsible for the spam. Let’s see if we can track down the script!

grep "cwd=" /var/log/exim_mainlog | awk '{for(i=1;i<=10;i++){print $i}}' | sort |uniq -c| grep cwd | sort -n | grep /home/

Running this will look at any lines in the exim log that contain the “cwd” string. This should help narrow down the folder where the spam is coming from. But we can get even more specific! Note that the next command doesn’t have as high a success rate as the previous ones, but when it works it saves so much headache.
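To get a feel for what the cwd one-liner returns, here is a sketch that runs the same filters over a few made-up log lines. The sample lines and the count_cwd helper name are assumptions for illustration; on a real server the input would be /var/log/exim_mainlog.

```shell
# count_cwd: read exim_mainlog-style lines on stdin and count how often each
# working directory under /home/ appears, least busy first (same filters as above).
count_cwd() {
  grep 'cwd=' |
    awk '{for (i = 1; i <= 10; i++) print $i}' |
    sort | uniq -c | grep cwd | sort -n | grep /home/
}

# Made-up sample log lines.
sample='2013-07-14 10:00:01 cwd=/home/user2/public_html/wp-content/uploads 3 args: /usr/sbin/sendmail -t -i
2013-07-14 10:00:02 cwd=/home/user2/public_html/wp-content/uploads 3 args: /usr/sbin/sendmail -t -i
2013-07-14 10:00:03 cwd=/home/user2/public_html/wp-content/uploads 3 args: /usr/sbin/sendmail -t -i
2013-07-14 10:00:04 cwd=/home/user1/public_html 3 args: /usr/sbin/sendmail -t -i
2013-07-14 10:00:05 cwd=/var/spool/exim 2 args: /usr/sbin/exim -q'

# Because the sort is ascending, the busiest directory is the last line.
busiest=$(printf '%s\n' "$sample" | count_cwd | tail -n 1 | awk '{print $1, $2}')
echo "$busiest"
```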

grep X-PHP-Script /var/spool/exim/input/*/*-H | awk '{print $3}' | sort | uniq -c | sort -nr

Now unlike the others, this actually searches the active email queue. So if you have hundreds or thousands of emails queued up (you can check this by running exim -bpc) this should work. It looks for the X-PHP-Script field in the header of the emails. This should be enabled by default in cPanel; if not, it can be enabled in WHM. Anyway, this should again sort and count exactly which script sent the email! Pretty cool, right?

So there you have it: if you are unfortunate enough to have a compromised system, this will help identify where the problem lies. And once you know, you can fix it and safeguard yourself for the future. In addition to the WordPress and CMS tips above, you may want to look at even more security-oriented plugins:

WP-Security

I’ve seen this used quite a bit over the interwebs and, although I’ve always been on the problem side of it, it should give you a little extra security to prevent such attacks. For PC-based compromises, you gotta have a virus scanner and a malware scanner. Here are my favorites:

Windows Defender

MalwareBytes

People will argue that you should use Avast or AVG over Windows Defender; personally I don’t think it’s necessary. Windows Defender generally does a good job at actively scanning your computer and catching anything that comes through. It’s quiet, sits in the background, integrates great with Windows and is completely free (and even free for businesses up to 10 computers)! The second is Malwarebytes, and I’ve personally seen it catch more malware than any competitor. It’s completely free but does have a paid option with more worthwhile features.

“Is there anything else I can do?” Uh yeah! Stop them before they even get to your server! That’s where the following tools come into play:

6Scan

CloudFlare

6Scan is a paid product and will scan and even fix many of the vulnerabilities you encounter. Rather than finding out you’re hacked from a visitor, a bounce back or the Google malware page, 6Scan will not only alert you but also help fix the issue! CloudFlare, in addition to speeding up your website through their CDN, also includes a firewall to help block many of the known attackers out there. Oh, and it’s free, with paid versions available for higher speed, better analytics and more finely tuned security settings.

Hopefully after you read this you know how to not only identify hacked accounts and spamming accounts but also know a few more steps to help prevent the same thing from happening in the future. I would love to hear about some of your stories or strategies so please leave them in the comments below!

March 23, 2013

Git is awesome, plain and simple! If you would like to install it on a cPanel and/or CentOS-based server you have 2 options: installing from an RPM or installing from source.

RPM/Yum Based

You should simply be able to do a yum install on the server and git will be pulled from the “updates” repo:

yum install git

If you run into a dependency issue with perl-Git, check and make sure that perl is not entered into the excludes in /etc/yum.conf. Now one issue with simply pulling from the basic repo is that the version may not be as up to date as possible. On my server I pulled down version 1.7.1, however the latest stable version of git is 1.8.2. You may be able to find much more up to date versions in the EPEL repo or RPMForge. You can also install the latest available version straight from source:

Source Install

First off, you’ll want to make sure you have any and all dependencies taken care of:

yum -y install curl-devel expat-devel gettext-devel openssl-devel zlib-devel

Now let’s grab the latest version and git (see what I did there?) to work!!

cd /usr/local/src/

wget https://github.com/git/git/archive/master.tar.gz

tar xzf master.tar.gz

cd git*

make prefix=/usr/local all

make prefix=/usr/local install

And that’s it! Now feel free to learn git (http://try.github.com) and give it a shot!

root@server [~]# git --version

git version 1.8.2.GIT

February 28, 2013

Spamd on cPanel seems to always fail for me. I don’t know if it’s something special within my config or if it’s just a cPanel thing in general. Because I am a lazy admin I don’t always bother with fixing things but jump to rebuilding them… this usually does the trick for me:

rpm -e `rpm -qa | grep spam`

/usr/local/cpanel/scripts/installspam --force

sa-learn -D --force-expire

sa-update -D

/usr/local/cpanel/scripts/spamassassin_dbm_cleaner

/usr/local/cpanel/scripts/fixspamassassinfailedupdate

/usr/local/cpanel/scripts/restartsrv_spamd

This just completely reinstalls spamd and cleans up any messes. Does the trick for me!

February 17, 2013

HAProxy is a very fast, very awesome and very free TCP/HTTP load balancer and failover program. It’s very simple but also quite powerful. The configuration is flexible enough to fit into many high-traffic infrastructures but also simple enough to fit into any design seeking simple high availability. It can support tens of thousands of connections at high speeds and can even provide a private-to-public address “translation”, if you will, by hiding web servers from the internet.

One of the most popular scenarios for HAProxy is load balancing in its simplest form. A web server struggling to keep up with traffic demands would benefit from putting the software in front of it, adding a second server and balancing between the two. This would effectively cut the load on any individual server in half by distributing it between the two, or however many you want, and should yield a much more stable web site.

However, load balancing is only the beginning. Because of the powerful nature of the config, many webmasters have found that much of its value lies in its ability to be a “traffic cop”, if you will. Imgur has a really interesting post on how they utilize the software, not for load balancing, but for directing any image requests to another server. Essentially, HAProxy grabs any GET request destined for a JPG, PNG or whatever and sends it to a separate Lighttpd process, and everything else goes to Apache. It would be trivial to take this concept and use it to your advantage. Rather than writing any custom code to separate out certain elements, you could simply write rules in HAProxy to send requests for your images, JavaScript, CSS files, whatever to a CDN and send all other traffic to a dedicated server. It’s the flexibility and power that make this a necessary piece of software for any Linux fan to take hold of. There are dozens of other use cases (conveniently located on their site) and quite a few more advanced features. For now, let’s just get this software installed and set up a basic round robin config!

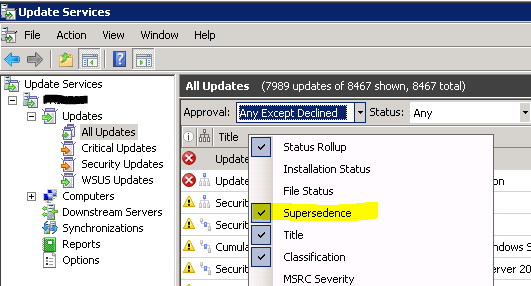

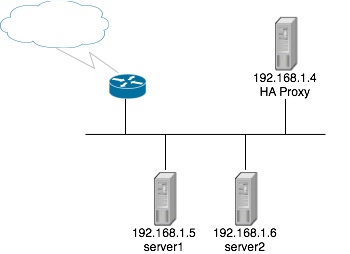

The Setup

The setup that we will be working with is populated with 1 HAProxy box and 2 web servers all on a single LAN. You can see the layout in this image:

All configuration will be done on the HAProxy machine and it is assumed that the web servers are already configured and displaying correctly. The HAProxy box I am using is running CentOS 5. Let’s get started!

Installing

If you are using CentOS, you will need to enable the EPEL repo and then simply install it via yum:

Debian should have this package in the default repo:

sudo apt-get install haproxy

If you are like me and love installing things from source, first make sure you have gcc installed. If you’re on CentOS, I just like installing all of the development tools. If you are low on space or resources you may not want to install everything, but it's just easier in my opinion:

yum groupinstall "Development Tools"

Then download and compile the program:

cd /usr/local/src/

wget http://haproxy.1wt.eu/download/1.4/src/haproxy-1.4.22.tar.gz

tar xzf haproxy-1.4.22.tar.gz

cd haproxy-1.4.22

make TARGET=linux26 #Change TARGET accordingly

cp haproxy /usr/sbin/

On the fifth line, make sure that you change the TARGET to match your linux kernel version. If you are unsure, run uname -r and note the first 2 numbers.

You can also append “ARCH=i386” to build the 32-bit binary if you are running on a 64-bit system. HAProxy works on quite a few machines, so you have the following options when it comes to the TARGET variable:

- linux22 for Linux 2.2

- linux24 for Linux 2.4 and above (default)

- linux24e for Linux 2.4 with support for a working epoll (> 0.21)

- linux26 for Linux 2.6 and above

- linux2628 for Linux 2.6.28 and above (enables splice and tproxy)

- solaris for Solaris 8 or 10 (others untested)

- freebsd for FreeBSD 5 to 8.0 (others untested)

- openbsd for OpenBSD 3.1 to 4.6 (others untested)

- aix52 for AIX 5.2

- cygwin for Cygwin

- generic for any other OS.

- custom to manually adjust every setting
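To avoid eyeballing the kernel version, the Linux part of the list above can be scripted. This is only a rough sketch: pick_target is a made-up helper name, it only knows the Linux targets, and it assumes that any kernel newer than 2.6.28 (3.x and later included) should use linux2628.

```shell
# Hypothetical helper (not part of HAProxy): map a kernel release string,
# as printed by `uname -r`, to one of the Linux TARGET values listed above.
pick_target() {
    release="$1"
    major=${release%%.*}        # "2.6.18-308.el5" -> "2"
    rest=${release#*.}          # -> "6.18-308.el5"
    minor=${rest%%.*}           # -> "6"
    minor=${minor%%[!0-9]*}     # drop any non-numeric suffix
    patch=${rest#*.}            # -> "18-308.el5"
    patch=${patch%%[!0-9]*}     # -> "18"

    # Anything that does not look like a numeric version falls back to generic.
    case "$major$minor" in
        ''|*[!0-9]*) echo generic; return ;;
    esac

    if [ "$major" -gt 2 ]; then
        echo linux2628
    elif [ "${minor:-0}" -eq 6 ] && [ "${patch:-0}" -ge 28 ]; then
        echo linux2628
    elif [ "${minor:-0}" -ge 6 ]; then
        echo linux26
    elif [ "${minor:-0}" -ge 4 ]; then
        echo linux24
    elif [ "${minor:-0}" -ge 2 ]; then
        echo linux22
    else
        echo generic
    fi
}

# Example with a CentOS 5 style kernel release:
pick_target 2.6.18-308.el5    # prints linux26
```

On the build box itself this could be used as make TARGET="$(pick_target "$(uname -r)")", but double check the result against the list before trusting it.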

You can launch the haproxy by running the following command:

haproxy -f /etc/haproxy/haproxy.cfg -sf `pgrep haproxy`

This can be run multiple times without fear of spawning overlapping processes. The -sf `pgrep haproxy` part hands the PIDs of any running HAProxy processes to the new one, which tells them to finish up and exit once it has started. The new process uses /etc/haproxy/haproxy.cfg as its config, which we will configure in the next section.

You can also create an init script from the file included:

cp examples/haproxy.init /etc/init.d/haproxy

chmod a+x /etc/init.d/haproxy

Note that this will not run until /etc/haproxy/haproxy.cfg is configured… so let's do that!

Build a Basic Config

We are going to assume that your config is held in /etc/haproxy/haproxy.cfg. Please note that if you installed a precompiled binary, such as through a deb package or RPM, this location may be different. Since we built from source, we first need to create the /etc/haproxy folder and then touch a file called haproxy.cfg inside it. Open the file with your favorite editor and paste the following:

defaults

log global

mode http

option httplog

option dontlognull

retries 3

option redispatch

maxconn 20000

contimeout 5000

clitimeout 50000

srvtimeout 50000

listen webservers 192.168.1.4:80

mode http

stats enable #Optional: Enables stats interface

stats uri /haproxy?stats #Optional: Statistics URL

stats realm Haproxy\ Statistics

stats auth haproxy:stats #Optional: username:password of site

balance roundrobin #balance type

cookie webserver insert indirect nocache

option httpclose

option forwardfor #Add X-Forwarded-For in the header

server server1 192.168.1.5:80 cookie server1 weight 1 check

server server2 192.168.1.6:80 cookie server2 weight 1 check

Modify this if needed and save it to /etc/haproxy/haproxy.cfg. Once you’re finished we can actually check the syntax of this config file by running the following:

haproxy -f /etc/haproxy/haproxy.cfg -c

If it's good, you should see “Configuration file is valid”. Go ahead and start everything up!

/etc/init.d/haproxy start

Go ahead and visit http://192.168.1.4 in your web browser and you should see the page from one of your two web servers! Pretty cool right?!
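Once things are running, the syntax check and the -sf restart shown earlier can be chained so that a broken config never replaces a working one. The following is just a sketch: check_then_reload is a made-up function name, and the HAPROXY_BIN variable is my own addition so the binary can be swapped out when testing.

```shell
# Hypothetical wrapper: only restart HAProxy if the config passes the -c check.
# HAPROXY_BIN is an assumed override for the binary name, not an HAProxy setting.
check_then_reload() {
    cfg="${1:-/etc/haproxy/haproxy.cfg}"
    bin="${HAPROXY_BIN:-haproxy}"

    if "$bin" -f "$cfg" -c; then
        # Same command as the manual launch: start a new process and
        # ask the old ones (found via pgrep) to finish up and exit.
        # $(pgrep haproxy) is left unquoted on purpose so each PID is its own argument.
        "$bin" -f "$cfg" -sf $(pgrep haproxy)
    else
        echo "Config check failed, leaving the running HAProxy alone" >&2
        return 1
    fi
}

# Usage: check_then_reload /etc/haproxy/haproxy.cfg
```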

Config Commentary

I added a few comments to some of the lines within the config to help better explain what it does. Here is a little more info on some of those commands.

- Stats Commands

- stats enable - Simply enables the statistics web interface

- stats uri /haproxy?stats - This defines the url where you can visit the stats. ex: http://192.168.1.4/haproxy?stats

- stats realm Haproxy\ Statistics - The site info in the authentication box

- stats auth haproxy:stats - The username and password of the url above. If this is removed the stats site will not require authentication

- balance roundrobin - The method used to balance between the two servers. Here are some of the more popular options; the full list can be found in the documentation:

- roundrobin - Each server is used in turns, according to their weights. This is the smoothest and fairest algorithm when the server’s processing time remains equally distributed.

- source - The source IP address is hashed and divided by the total weight of the running servers to designate which server will receive the request. This ensures that the same client IP address will always reach the same server as long as no server goes down or up. If the hash result changes due to the number of running servers changing, many clients will be directed to a different server.

- leastconn - The server with the lowest number of connections receives the connection. Round-robin is performed within groups of servers of the same load to ensure that all servers will be used. Use of this algorithm is recommended where very long sessions are expected, such as LDAP, SQL, TSE, etc… but it is not very well suited for protocols using short sessions such as HTTP.

- server server1 192.168.1.5:80 cookie server1 weight 1 check - There are a lot of options you can use here. Let's break some down:

- cookie [value] - Sets the value of the persistence cookie that identifies this server to the client. In this case the value is server1 (the cookie itself is named webserver by the cookie line above it in the config).

- weight [int] - This, to put it simply, puts a specific value on the server's power/importance. In our scenario both are valued at 1, meaning that both are taken as equally powerful and thus traffic should be equally distributed. The default is 1, the max is 256. The higher the number, the more load the server receives in proportion to the values of the other servers.

- backup - If this is added to the server line, the server is only used when all non-backup servers are down. This is good if you want to use it purely as a failover.

- check - This enables health checks on the server. If this is not added, a server will always be considered available even if it is not actually up.

- disabled - When added, the server is marked as down in maintenance mode.

- fall [int] - The number of consecutive failed health checks before the server is considered to be down. Default is 3.

- rise [int] - The number of consecutive successful health checks before the server is considered to be back up. Default is 2.

There are many more options and more explanations which can be found in the documentation.
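To get a feel for what the weight values mean in practice, here is a small sketch that works out each server's rough share of the traffic under roundrobin. The weight_share name and the example weights are made up for illustration, and the percentages are rounded down.

```shell
# Hypothetical helper: given a list of server weights, print the approximate
# share of requests each server should receive (integer percent, rounded down).
weight_share() {
    total=0
    for w in "$@"; do
        total=$((total + w))
    done
    # Nothing to divide by if no weights (or only zeros) were given.
    [ "$total" -gt 0 ] || return 1

    i=1
    for w in "$@"; do
        echo "server$i: weight $w -> $((100 * w / total))%"
        i=$((i + 1))
    done
}

weight_share 1 1      # both servers get 50%
weight_share 30 75    # roughly 28% and 71%
```

Only the ratio between the weights matters, so 1 and 1 behaves the same as 50 and 50.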

What Next?

About that documentation, you can find it here. There are far too many options to detail in a beginner's guide like this one. Here are a couple more options that are pretty cool and should help you out!

- monitor-uri /haproxy - When this is enabled, visiting http://192.168.1.4/haproxy simply returns a 200 success response if the haproxy service is working.

- option httpchk /check.html - Essentially a much more powerful version of the “check” command. It requests /check.html from each backend server, and if the response is anything other than a 2xx or 3xx status, it marks the server as down. You can take this a step further and create a PHP page that checks Apache, MySQL, server load, etc… and sends an error 500 if any one of those is unhealthy. This will take the server out of rotation until you can correct it.

ACLs and Frontends

I wanted to wrap this up with another sample configuration. This one actually incorporates one of my favorite features: frontends and ACLs. Take a look at this:

global

daemon

#maxconn 30000

#ulimit-n 655360

#chroot /home/haproxy

#uid 500

#gid 500

#nbproc 1

pidfile /var/run/haproxy.pid

log 127.0.0.1 local4 notice

defaults

mode http

clitimeout 600000 # maximum inactivity time on the client side

srvtimeout 600000 # maximum inactivity time on the server side

timeout connect 8000 # maximum time to wait for a connection attempt to a server to succeed

stats enable

stats auth admin:password

stats uri /admin?stats

stats refresh 5s

stats hide-version

stats realm "Loadbalancer stats"

#option httpchk GET /status

retries 5

option redispatch

option forwardfor

option httpclose

monitor-uri /test

balance roundrobin # each server is used in turns, according to assigned weight

default_backend default

frontend http

bind :80

acl nagios path_reg ^/nagios/?

acl app path_reg ^/app/?

acl mysite path_reg ^/blog/?

use_backend nagios if nagios

use_backend app if app

use_backend mysite if mysite

backend default

server web1 172.16.1.201:80 cookie A check inter 1000 rise 2 fall 5 maxqueue 50 weight 30

server web2 172.16.1.202:80 cookie B check inter 1000 rise 2 fall 5 maxqueue 50 weight 75

stats admin if TRUE

option httpchk HEAD /check.txt HTTP/1.0

errorfile 503 /var/www/html/503.html

backend nagios

server nagios 172.16.1.217:80 check inter 1000 rise 2 fall 5 maxqueue 50 weight 30

errorfile 503 /var/www/html/503.html

backend app

server clear 172.16.0.39:81 check inter 1000 rise 2 fall 5 maxqueue 50 weight 30

backend mysite

server mysite 172.16.1.203:80 check inter 1000 rise 2 fall 5 maxqueue 50 weight 30

The defaults section is a set of instructions that apply to every frontend and backend defined after it. This is really useful if you have a few settings that need to apply to everything. In the frontend section, the “nagios” ACL uses a regex that matches any path starting with /nagios. Then below it, the use_backend line says that if the incoming request matches that ACL, pass it to the “nagios” backend! So if someone hits http://192.168.1.4/nagios, HAProxy will display data from http://172.16.1.217/nagios! You can also match image, JavaScript or other file requests as mentioned in the beginning and use an ACL to route them wherever you want!
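If you want to sanity check which backend a given path would land on before touching the live config, the three path_reg rules can be approximated with grep -E. This is only a sketch: route_path is a made-up helper, and grep's extended regexes are standing in for HAProxy's own regex matching. The rules are tried in the same order as the use_backend lines, and anything that matches none of them falls through to default_backend.

```shell
# Hypothetical helper: print the backend that the sample frontend's
# ACLs would pick for a request path, first match wins.
route_path() {
    path="$1"
    if   printf '%s\n' "$path" | grep -Eq '^/nagios/?'; then echo nagios
    elif printf '%s\n' "$path" | grep -Eq '^/app/?';    then echo app
    elif printf '%s\n' "$path" | grep -Eq '^/blog/?';   then echo mysite
    else echo default
    fi
}

route_path /nagios/cgi-bin/status.cgi   # prints nagios
route_path /blog                        # prints mysite
route_path /index.html                  # prints default
route_path /application                 # prints app
```

The last call is worth noticing: because the regexes are only anchored at the start, ^/app/? also matches /application. If that is not what you want, a stricter pattern such as ^/app(/|$) would be one way to tighten it.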

HAProxy’s config is really easy to use and it has a lot of value in a web stack. I hope this has given you at least the basics of HAProxy and that you have found some value in it. Enjoy!

February 09, 2013

InnoDB can be a complete pain to manage, troubleshoot and fix… but this is all outweighed by its impressive performance improvements, as documented all over the place. Simply switching your tables to InnoDB should increase your performance, but you can go even further by optimizing them to take advantage of some of the core features of InnoDB. Either way, switching should be your first step, so let's get started!

Switching 1 Table

If you're running a forum or other software that serves the majority of its data out of 1 table, it may make sense to change just that 1 table rather than the entire database. In that case, the following command should suit you just fine. Log into your mysql console and run the following:

ALTER TABLE table_name ENGINE=InnoDB;

Simple as that! You should of course always make backups before running this just in case. To verify the InnoDB tables in the system, log back into your mysql and run this:

SELECT table_schema, table_name FROM INFORMATION_SCHEMA.TABLES WHERE engine = 'innodb';

Switching 1 Database

Of course, switching just 1 table may not be enough, so let's bring it up to 1 complete database. Having a table under InnoDB should yield some solid improvements; having an entire DB is even better. We have two options: a bash script and a mysqldump.

Bash Script

TABLE=db_name

echo "SET SQL_LOG_BIN = 0;" > /root/ConvertMyISAMToInnoDB.sql

mysql -A --skip-column-names -e"SELECT CONCAT('ALTER TABLE ',table_schema,'.',table_name,' ENGINE=InnoDB;') InnoDBConversionSQL FROM information_schema.tables WHERE engine='MyISAM' AND table_schema IN ('${TABLE}') ORDER BY (data_length+index_length)" >> /root/ConvertMyISAMToInnoDB.sql

This will output the appropriate mysql commands to alter all tables in the database defined in the TABLE variable. The reason this outputs to a sql file rather than just running the commands is so that you can manually review it and ensure nothing wonky happened. To apply this, run the following:

mysql < /root/ConvertMyISAMToInnoDB.sql

MySQL Dump

I'm just providing this so you have multiple options. Here is a way to achieve the same effect by changing everything over in a dump. This is useful if you want to duplicate the database first for testing:

mysqldump [database] | sed -e 's/^) ENGINE=MyISAM/) ENGINE=InnoDB/' > [innodb-database.sql]
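If you are nervous about what that sed expression will touch, you can try it on a scrap of text first. Below is a sketch using a made-up table definition (the posts table and its columns are purely for illustration). Because the pattern is anchored with ^), only the line that closes the CREATE TABLE statement is rewritten, and the string sitting inside the column default is left alone.

```shell
# Wrap the exact sed expression from above so it can be reused in a pipe.
convert_engine() {
    sed -e 's/^) ENGINE=MyISAM/) ENGINE=InnoDB/'
}

# Hypothetical snippet in the general shape of mysqldump output.
sample_dump='CREATE TABLE `posts` (
  `id` int(11) NOT NULL,
  `note` varchar(64) DEFAULT "uses ENGINE=MyISAM"
) ENGINE=MyISAM DEFAULT CHARSET=utf8;'

# Only the closing ") ENGINE=..." line should change.
printf '%s\n' "$sample_dump" | convert_engine
```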

Changing Everything

You could change all of your tables over by doing a dump or multiple dumps and sedding out the content similar to the above, but I just don't see a use in it. Here’s a shell script that works like the one above, only it selects all MyISAM tables except those in information_schema, mysql or performance_schema:

echo "SET SQL_LOG_BIN = 0;" > /root/ConvertMyISAMToInnoDB.sql

mysql -A --skip-column-names -e"SELECT CONCAT('ALTER TABLE ',table_schema,'.',table_name,' ENGINE=InnoDB;') InnoDBConversionSQL FROM information_schema.tables WHERE engine='MyISAM' AND table_schema NOT IN ('information_schema','mysql','performance_schema') ORDER BY (data_length+index_length)" >> /root/ConvertMyISAMToInnoDB.sql

less /root/ConvertMyISAMToInnoDB.sql

Then verify and apply it with the following:

mysql < /root/ConvertMyISAMToInnoDB.sql

And that does it! Enjoy the wonderful performance improvements that is InnoDB.

February 02, 2013

There are quite a few times where troubleshooting slow page load times can, well… be troublesome. Now this doesn't work every time, and unless you are very familiar with all the system calls strace shows (if so, why are you reading this?), it may not even be useful. However, this command has completely solved my issue on a number of occasions:

strace -t -f -o strace.txt /usr/bin/php index.php

If you open strace.txt using less or your favorite file editor, you will notice 3+ columns, with a time field on the left hand side:

12521 13:59:33 open("/opt/curlssl//lib64/libstdc++.so.6", O_RDONLY) = -1 ENOENT (No such file or directory)

The first column is the PID of the actual PHP command, the second is the time at which the call ran, and the third and fourth are the system call and its result, including potential errors such as a file not being found in this case. If you see a page just spin and spin and spin, check the times and see if there is one call that is slowing everything down. I made a little test to demonstrate this. Take a look at the following PHP script:

<?php

$fp = fsockopen("www.example.com", 8080, $errno, $errstr, 30);

if (!$fp) {

echo "$errstr ($errno)<br />\n";

} else {

$out = "GET / HTTP/1.1\r\n";

$out .= "Host: www.example.com\r\n";

$out .= "Connection: Close\r\n\r\n";

fwrite($fp, $out);

while (!feof($fp)) {

echo fgets($fp, 128);

}

fclose($fp);

}

?>

This script will try to hit www.example.com and connect to port 8080. That port is closed, so the connection will fail and time out. The timeout is what's going to get us here. The script can't move past this point until the connection times out or the remote server sends us something. Let's take a look at the strace:

24281 16:05:50 fcntl(3, F_GETFL) = 0x2 (flags O_RDWR)

24281 16:05:50 fcntl(3, F_SETFL, O_RDWR|O_NONBLOCK) = 0

24281 16:05:50 connect(3, {sa_family=AF_INET, sin_port=htons(8080), sin_addr=inet_addr("192.0.43.10")}, 16) = -1 EINPROGRESS (Operation now in progress)

24281 16:05:50 poll([{fd=3, events=POLLIN|POLLOUT|POLLERR|POLLHUP}], 1, 30000) = 0 (Timeout)

24281 16:06:20 fcntl(3, F_SETFL, O_RDWR) = 0

24281 16:06:20 close(3) = 0

If you look solely at the second column, you will see a big jump in time of 30 seconds for this one call, which matches the 30 second timeout passed to fsockopen(). Taking a closer look at the calls themselves, the address sin_addr=inet_addr(“192.0.43.10”) and the port sin_port=htons(8080) seem to be the likely culprit. From there you can find out if the port is open in your firewall or theirs and, if all else fails, use a clever hack to work around it!
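On a longer trace, hunting for the jump by eye gets old fast. Here is a rough awk sketch that does the comparison for you. find_gaps is a made-up name, it assumes the PID-then-time layout produced by strace -t -f as shown above, it tracks each PID separately, and it does not handle a trace that runs past midnight.

```shell
# Hypothetical helper: print every call that was followed by a pause of at
# least N seconds (default 5) before the same PID made its next call.
#   $1 = strace output file, $2 = minimum gap in seconds
find_gaps() {
    awk -v min="${2:-5}" '
    {
        # Column 2 is HH:MM:SS, convert it to seconds since midnight.
        split($2, t, ":")
        now = t[1] * 3600 + t[2] * 60 + t[3]
        pid = $1
        if ((pid in last) && now - last[pid] >= min) {
            printf "%ds gap after: %s\n", now - last[pid], prev[pid]
        }
        last[pid] = now
        prev[pid] = $0
    }' "$1"
}

# Usage: find_gaps strace.txt 5
```

Run against the trace above, this should point at the poll() line, since that is the call the script sat in for 30 seconds.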

January 30, 2013

If you’re troubleshooting an issue on your server and you suck at keeping documentation, you can simply pipe the SSH output into a file for review later:

ssh root@server.com | tee -a /path/to/file

What is really cool about this method (As opposed to just saving the history) is that it will also save the result of the command.

Last login: Wed Jan 30 10:19:44 2013

root@hydra [~]# echo "Hello"

Hello

root@hydra [~]# logout

You can also automate this. Create a file in your ~/bin/ directory called sshlog or similar and input the following code:

ssh "$@" | tee -a /path/to/file

Set the execution bits and you can now run sshlog root@server with any other flags you would normally pass to SSH, and the output will be appended to your file (as defined by the -a flag in tee). If you want this to always run when you kick off ssh, simply create an alias:
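As an alternative to a script in ~/bin/, the same idea can live in your shell startup file as a function. This is only a sketch of one way to do it: the SSHLOG_FILE and SSH_BIN variables and the default log path are my own assumptions, and the alias in the comment is one plausible form rather than the only one.

```shell
# Hypothetical shell function version of sshlog.
# SSHLOG_FILE (log location) and SSH_BIN (which ssh to run) are assumed names.
sshlog() {
    log="${SSHLOG_FILE:-$HOME/ssh-session.log}"
    # "command" skips any function or alias named ssh, so aliasing
    # ssh to sshlog does not make this call itself.
    command "${SSH_BIN:-ssh}" "$@" | tee -a "$log"
}

# One possible alias so plain "ssh" always goes through the logger:
#   alias ssh='sshlog'
```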

January 29, 2013

If you have ever experienced this error and you are positive that mysqli is installed, the issue is probably because you need mysqlnd. Check out the following code snippet:

<?php

$conn = new mysqli($servername, $username, $password, $database, $port);

$r = $conn->query("SELECT * FROM `dummy`", MYSQLI_STORE_RESULT);

$arr = FALSE;

if($r !== FALSE)

{

echo "<br />Vardump:<br/>";

var_dump($r);

echo "<br /><br />Checking if methods exist:<br/>";

echo "<br />fetch_assoc() method exists? ";

var_dump(method_exists($r, 'fetch_assoc'));

echo "<br />fetch_all() method exists? ";

var_dump(method_exists($r, 'fetch_all'));

echo "<br />";

$arr = $r->fetch_all(MYSQLI_ASSOC);

$r->free();

}

print_r($arr);

When running this, you may encounter the following error:

fetch_assoc() method exists? bool(true)

fetch_all() method exists? bool(false)

The fetch_all() method within mysqli is only available when PHP has been compiled against mysqlnd. If you are using CentOS, atomicorp has RPMs within their repo that should install this:

http://www6.atomicorp.com/channels/atomic/centos/6/i386/RPMS/

Debian can be found here:

http://packages.debian.org/sid/php5-mysqlnd

If you are running cPanel, add this:

To this:

/var/cpanel/easy/apache/rawopts/all_php5

And then run easyapache:

/scripts/easyapache --build

Now every time you need to recompile, that flag will always be tacked on. Enjoy!

January 28, 2013

I receive a lot of questions and concerns from developers stating that the PHP mail function is not working correctly. The problem is that 9 times out of 10 it really is installed and working, and the issue usually comes from a coding error or user error (that's the thing between the keyboard and the chair). One of my core philosophies when troubleshooting issues is to break them down to their most basic form. So to verify whether or not it's actually working, just create a blank php page and insert the following:

<?php

$to = "[email protected]";

$subject = "Email works!";

$message = "Huzzah!";

$from = "root";

$headers = "From:" . $from;

mail($to,$subject,$message,$headers);

echo "Mail Sent.";

?>

This is as basic as it gets. Really good for verifying whether or not the issue lies with your server, or you! Just run it with the following:

If all goes well you should now be receiving an email directly to your inbox. If not, then I would start by looking at your php.ini and seeing if mail is listed among the disabled functions. You will also want to ensure that you actually have a valid MTA such as sendmail or exim and that it is indeed working and accepting connections through localhost (or as defined by your php.ini). The mail function is built into the PHP core, so there really isn't any extra enabling that needs to happen here. If you are still having trouble, I would recommend hopping on the IRC or forums for your respective distro!

January 28, 2013

This is my very first post! Who has two thumbs and is excited!
